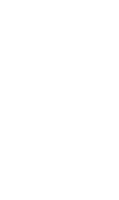

Last updated on June 2nd, 2023 at 06:53 am

Today’s post is a continuation of the previous post, ‘Artificial Intelligence – The Conversation’. If you haven’t read it yet, please do so by clicking here. The conversation becomes even more intriguing as we explore the fears and challenges associated with the emergence of artificial intelligence.

Robomua is AI-powered

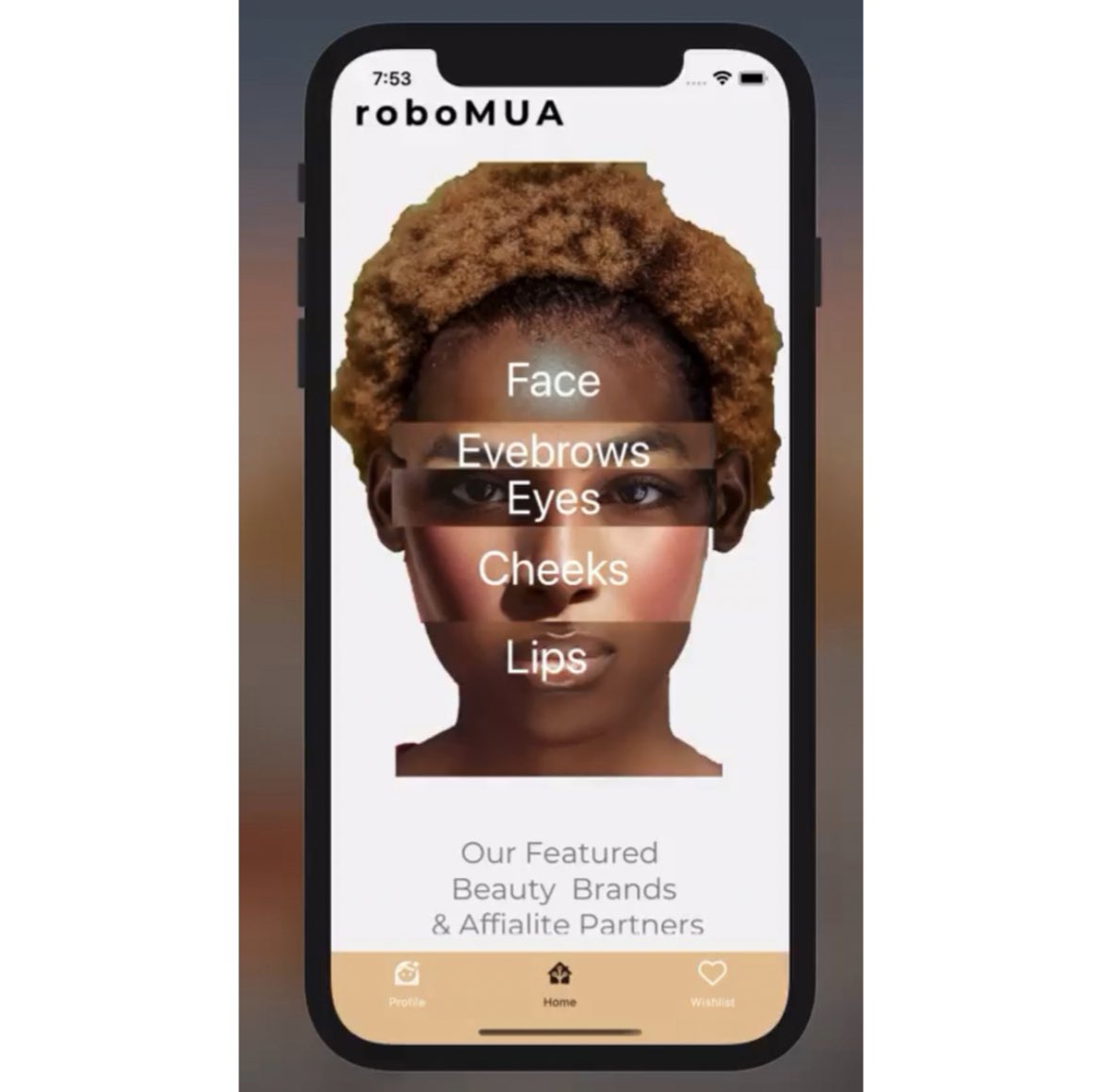

Peter: Let’s move a bit into your product. You are the founder of AI-powered software that offers beauty solutions, and your maiden application is a makeup kit that utilizes AI to determine the best colour combinations and tones for a person’s complexion. What motivated you to develop Robomua?

Emmanuel: I wanted to get into the field of AI. I love it. I liked math, but that’s another conversation. I wanted to be in the field, and as I was thinking about what kind of problems we could solve with AI, I wanted to think outside the box. I didn’t want to build things that everybody else was building. My co-founder, who is also in the same space, said she had always had huge difficulty finding the right makeup products for herself. Makeup is not one-size-fits-all. So what we did was create a prototype to help her find the right makeup products. Since it was successful, we decided to apply this computer vision AI algorithm to help other people find the right products, so that they spend less money and know exactly what works for them. That’s how we transitioned into it, and that’s the genesis of the Robo makeup artist, or Robomua.

Artificial intelligence at work

Peter: How does AI function in Robomua?

Emmanuel: We have multiple algorithms. We have computer vision algorithms to help you find your shade for foundations, skin tints, concealers, and setting powders, and we also have algorithms to help you find your shade for shapewear, if you think of SKIMS by Kim Kardashian or other shapewear brands. You simply take a picture, and the algorithm identifies the undertones of your skin shade so it can recommend products that are an exact fit for you. We have other things in the pipeline, but those are the things our algorithms have done for our users so far.

Peter: Alright. With Robomua, do you need human intervention? If the general idea is that AI is going to take over everything, where does human intervention come in when using Robomua? Is Robomua going to do everything for you, or do you have to step in and select something? How does it work?

Emmanuel: We are more of an assistant. We don’t do your makeup for you; we don’t have a robot that does the makeup. We just give you helpful suggestions to make the process easier, faster, and more convenient. But mainly, we help those who own beauty brands and sell makeup products. It’s easy for them to incorporate our algorithms so their customers can find the right products from their range. So it’s less about doing the makeup for the user and more about helping makeup brands make their customers’ shopping experience more efficient.

Robomua is just an assistant

Peter: I was just about to accuse you of trying to take beauticians out of their jobs. But you’ve just…

Emmanuel: No, we partner with them. We want to make sure that their jobs are easier. We don’t want to take their jobs; we want to empower them and make their work easier. Yeah.

Peter: OK, then why didn’t you create Robomua as a beauty manual instead? Why did you have to…?

Emmanuel: We are building on top of things, so eventually it’s going to get there. We do work with beauticians. If they have the app and a client comes in looking for products, they can just use the app to help that client. We are not taking that process away from them. Again, we are helping them, empowering them to make their process easier.

Emmanuel's background

Peter: You majored in electrical engineering, right?

Emmanuel: I studied computer science and math. I switched from electrical engineering at the Kwame Nkrumah University of Science and Technology to computer science and math.

Peter: You are also a software engineer, correct?

Emmanuel: Yes.

Peter: OK. And what software do you specialize in?

Emmanuel: What do you mean by “specialize in”?


Peter: When people say they are software engineers, I’m sure, in the layman’s view, there are different types of software they can develop. So, is there a particular type of software that you…?

Emmanuel: You can do front-end and back-end engineering, but I mostly do machine learning engineering. That is more about building models and deploying them, but it also involves front-end and back-end software engineering.

Peter: Okay. And I’m asking this because I know you’re a certified Python instructor, so I was just curious to know whether your Python background helped you with this AI thing. Or?

Emmanuel: Absolutely. Python helped a lot. I use other languages as well, but Python is a huge part of it. I teach Python on Coursera, but it’s Python for machine learning, not general Python; the Python I teach is mainly aimed at doing machine learning. So, Python is needed to explore this AI space if you’re interested in its engineering aspects.

Is AI sentient?

Peter: You’ve been using the term machine learning. One thing that causes apprehension when it comes to AI is the mimicry of sentience: having human-like features, as if the AI can think. Recently there was this story… I think it was fake news, but it showed what the general apprehension out there looks like. It was about Elon Musk developing a robot wife or something like that. I don’t know if you saw it on social media. You charge the robot, which is going to be your wife; you can have sex with the robot; and so on. So that is the idea of AI having sentience. Doesn’t it scare you? How do you deal with that?

Emmanuel: I think you have to ask: how do you define sentience? As we discussed earlier, the way we train AI models is that we show them a certain amount of data along with the answers for that data, and eventually the AI can predict the answers without being given them. If you give it a different set of data, it can predict what the answers are going to be. So how do you define sentience? A lot of what people assume is sentience is just artificial intelligence predicting what the next thing should be. If you use ChatGPT, it predicts what the answers should be based on the information it was trained on. If you see a video of somebody talking to a robot, the robot is predicting what the next words in the sequence should be. So, do you define sentience as being able to predict? My fear is not about that, because if you see how AI models are trained, you realize, oh, it is math that is doing this. The calculus that people hate is what makes this prediction happen. It’s less “Oh my God, this is a sentient being” and more math in application, math in motion. So, anybody in high school doing calculus who is asking, “How am I going to apply this?”: AI! All the elective math students who hate it and don’t like it: AI!

That’s why my fear is not “Oh my God, this is sentient,” because it is just predicting what the next sequence of events will be. So it could be wrong. It could predict something bad, and that is the fear we should have: that it could predict something bad. I don’t think there’s ever going to be a fully human robot. I think human biology, the human body, and the human being are way more complex than we give them credit for. We are more complex than you can imagine. The fact that a child can learn to speak English, Twi, or their local language is insane, right?

Running ChatGPT costs enormous amounts of money every month just to let the AI predict, while as a human being you can simply sit in a class and learn. My fear is not that we’re going to have robots that are exactly like humans, because I think that’s close to impossible. The human being is complex. The way we’re able to function, our biology, our sociology, is insane. It’s wild. Replicating that in machine form is going to be extremely difficult. So sentience is not my fear, because no matter how good the algorithm is, it’s still just predicting. There’s something internal in humans that you can’t create in a robot. What I fear is that it’s going to make wrong predictions based on wrong information. Some algorithms can become racist. They can have a lot of biases. That is my fear, right? But in terms of being sentient, it’s just predictive. If you ask anybody who builds models, they will say it’s just math, math in motion. That’s not my fear.
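To make Emmanuel’s point about “math in motion” a little more concrete, here is a minimal Python sketch of the training process he describes. It is our own illustration, not Robomua’s code: the model is shown a handful of inputs together with their answers, uses derivatives (the calculus he mentions) to adjust two numbers, `w` and `b`, and then predicts the answer for an input it has never seen. The toy data, the straight-line model, and the names `train`, `lr`, and `steps` are all assumptions made up for this example.

```python
# Toy "training data": inputs paired with their answers (labels).
# These numbers are made up for illustration; they follow y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]


def train(xs, ys, lr=0.05, steps=2000):
    """Fit a straight line y = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # start with a model that knows nothing
    n = len(xs)
    for _ in range(steps):
        # Partial derivatives (calculus) of the mean squared error
        # with respect to w and b, averaged over all examples.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step "downhill": move w and b against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


w, b = train(xs, ys)

# Predict the answer for an input the model was never shown.
prediction = w * 10 + b
print(f"w={w:.3f}, b={b:.3f}, prediction for x=10: {prediction:.2f}")
```

Nothing in the loop "understands" lines; it simply nudges two numbers until its predictions match the answers it was shown. Systems like ChatGPT rely on the same basic idea, gradient-based training on examples, at a vastly larger scale and with next-word prediction in place of a single number.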

Responsibility and the law

Peter: OK. It’s good that you’ve mentioned the issue of prediction: something can go wrong, and it goes wrong because of an algorithm or its predictive powers. But when it does go wrong, who takes responsibility? What does the law say about this?

Emmanuel: Oooh! That’s a good question.

Peter: Because, if you noticed, when the Internet came to life and data became the new gold, we had to develop bodies to regulate data privacy. Now, as part of its development, every website is supposed to display its privacy policy. So when it comes to AI, who do we turn to for regulation if an AI model goes wrong or causes something that we consider, quote, immoral or evil? Who is to take the blame?

Emmanuel: That’s a good question. I’m going to split it into two parts. The first part is that there should be laws regarding AI. If you look at the early Internet, before we had laws in place (I don’t remember exactly when), there weren’t any laws because we didn’t fully understand what the Internet was. It was just a playground; we just played with it. Then people started hacking things, so now we have cybersecurity laws. People can scam others on the Internet, so we have laws against scams. We need laws. I think at first people, especially people in government, since they’re not very technical, didn’t fully understand what to do with AI. But more conversations have been happening now, and I think the explosion of ChatGPT is what propelled that conversation forward. Governments are starting to figure out what they need to do. There are other people who are more qualified than me to have that discussion, because I’m not a legal person, but there should be laws for AI, and I think slowly we are going to get there. There are going to be laws for AI across continents and around the world. So definitely, there should be.

There should be laws!

However, in terms of who you blame, that’s a big, big, big question. If I’m a software engineer and I build a model for a company and the model makes a mistake, are you going to sue the company, the software engineer at the company, or the model itself? I suspect there will come a time when it is very difficult to sue over algorithms, because if everybody can build an algorithm and deploy it, who are you going to sue? You can’t sue the person, because the person didn’t make that prediction. All the person did was show the data to the model, and the model made the prediction. So how do you sue the person? I think legal institutions are going to have to figure out that part, and it is going to be harder than creating AI laws. Who bears the responsibility? I train a model, but I don’t make the predictions it generates; the model does. Am I the one in charge?

Will there be a shutdown?

Peter: But that’s where the apprehension deepens even further, because we are now looking at shifting responsibility and safety onto a computer. So we have two extremes, where you’re announcing, hey, I didn’t make this prediction; this thing made the prediction. And as humans, we’re always trying to create better systems and do things appropriately.

If the disadvantages outweigh the advantages, then there has to be some sort of shutdown of this whole AI thing. As it stands now, we’ve not yet reached that point, because the advantages outweigh the disadvantages. But hypothetically, if we reach a stage where things are going wrong and are not as we expect them to be, would you support a lockdown on AI for a while, or do you think something else can be done?

AI is broader than ChatGPT

Emmanuel: It depends on your view of AI. A lot of times, people only see the prediction part; they only see things like ChatGPT. But AI is broader than ChatGPT answering questions or telling you something. It is bigger than Snapchat’s My AI or ChatGPT having a conversation with you. Whenever people think of shutting down AI, those are the specific examples they’re thinking of. But AI is broad, so if you shut it down, Facebook is going to have a harder time with content moderation, because anybody can post anything, and it will be very hard for Facebook to manually detect who is posting something that is against the law and needs to be taken down. YouTube will have a hard time too: music labels are going to sue YouTube because people are using their music illegally or without credit, and without AI that is hard to catch.

I think the calls for a shutdown come from the applications people are seeing. I think laws are needed, but I don’t think there needs to be a shutdown. This is my personal opinion, and my thoughts could change at any moment, but every technology is dangerous in some respects. The Internet is a dangerous place; if you think critically about it, the Internet is dangerous. Some studies show negative effects of the Internet on teenagers’ mental health.

Does that mean we need to shut down the Internet? I do agree that we need laws. We need strict laws, and we need to take precautions as we do these things. But a shutdown? Again, taking the Internet as an example of a dangerous place, does that mean we need to shut it down? That is my opinion, but the field of AI is bigger than ChatGPT, and I want a lot of people to fully understand that. If you think ChatGPT is the only AI, or that interacting with a chat model is the only form of AI available, that’s not accurate. We need to expand our view beyond that. We need to move with caution, but I don’t think shutting down technology is very helpful.

What does history say?

Peter: I know you’re an enthusiastic student of history, and you usually like to compare eras and generations. Have you thought about whether something in the distant past correlates with the rise of AI now? You usually pinpoint certain events in history and say we should learn from them because the same thing is happening now. So with AI, have you had that conversation in your mind?

Emmanuel: Yeah. That’s a great line of questioning. Now everybody has data and can go online, so they think it’s as easy as opening a website. But it’s not. We can do everything on the Internet. We can watch movies. People take it for granted that you can take a picture and post it on social media; that didn’t always exist, right? Now, if you want to read a book, it’s on the Internet. There’s a PDF version of that book, right? That’s super. But the Internet has also been used to commit crimes, and it’s still being used to commit crimes. It’s used to do ridiculously horrible things as well. As I’ve been saying all along, the Internet and artificial intelligence are very comparable. Now everybody has access to the Internet; most parts of the world do. That’s the closest comparison to artificial intelligence, and that is why we must be cautious not to end up with a dark web of AI. We don’t want a dark AI emerging the way the dark web did with the Internet. If we can make AI safer, I think that would be the best option.

Advice for AI enthusiasts

Peter: Thank you very much. It’s been a wonderful discussion, and I’ve learned quite a lot. I just want to know what you have for your fellow Ghanaians. I’m putting you in the position of our president, who always uses the term ‘fellow Ghanaians’. What would be your encouragement to us? Sometimes it looks as though this is a Western philosophy that has nothing to do with Africa or, let’s say, developing countries. But I was quite impressed when I watched a debate on artificial intelligence at the Doha Debates. One of the debaters was a data scientist from Kenya who is using AI to help track certain health issues, and she also teaches other young people how to use it. So when it comes to our part of the world, what would be your advice or encouragement for someone who wants to do something important? You often see this in our young kids, who model different 3D objects from tin cans just to mimic what they see. What would be your advice for such people?

Emmanuel: So my advice would be ‘Sika mpɛ dede’ (a Twi phrase meaning “Money doesn’t like noise”; it was used by the president of Ghana in one of his addresses to the nation).

Emmanuel and Peter burst out laughing at Emmanuel’s comment.

Ghanaians in the AI space

Emmanuel: There are a lot of Ghanaians in the AI space. When we were growing up, we had limited views of what we could be, but thankfully, many people have since broken barriers and paved the way. I know Ghanaians in the AI field in Ghana who are doing amazing things. Technology is just a tool, and all we need to do is tap into that tool and leverage it to solve our problems. So if you’re young, know that there are many Ghanaians and people of African origin doing amazing things in the AI space. Look up to them and connect with them. Take a look at what you’re doing and ask, “Hey, how can I leverage what they’ve done to build solutions to problems I see in my surroundings or my community?” There are also communities you should expose yourself to. If you try to do it on your own, it’s going to be very, very difficult. So plug yourself into one of those communities. I know most of them are in Accra, but there are a few in Kumasi, and I’ve seen a couple in Takoradi and Sunyani as well. So plug yourself into a community or find a Ghanaian who’s in this space and connect with them. See how they got there and how you can leverage what they know to build solutions to your problems. That would be my advice.

Future conversations

Peter: Thank you so much. This discussion has been quite interesting and eye-opening, especially when you mentioned the issue of prediction versus responsibility. It’s something I hadn’t thought about, but I think you’ve made a good point there. I also hope you’ve calmed the fears of many of us who think AI is there to eat us up and finish us off. Hopefully, we will see better things happening. I think this whole conversation ties into transhumanism, the concept of humans becoming better and living better through technology. If AI is on a good path, then we may become transhuman sometime in the future. I don’t know if that’s a Hollywood mentality on my part, but that is probably where we are heading. We have always been evolving and we are still evolving, so I think we shouldn’t be scared of this coming evolution. You just have to know how best to evolve; if not, you’re going to go extinct.

Speaking of evolution, we touched on a bit of history, and we will have other sessions. I would love to hear your thoughts on other philosophical ideas and certain things in history. I think we did something similar on one of your posts, where I talked about language and transliteration. Hopefully, we’ll have more conversations like this. I’ve enjoyed this one; it has been so good. Thank you for your time. Do you have any final words for us before we call it a day?

Final words

Emmanuel: Thank you so much for this opportunity. This was a dope conversation. My final words are: “E-math is important. You don’t have to get an A, but know that the calculus we think is ridiculous, and the notion that you’re never going to apply it anywhere in life, are lies. Huge lies. So if you’re doing E-math in high school (this is only for high school students) and you are thinking, ‘This is boring; this is not doing anything for me,’ know that you’re going to use it for AI one day.” Those would be my final words. Thank you so much for this conversation. I’d love to have historical conversations too, so let me know.

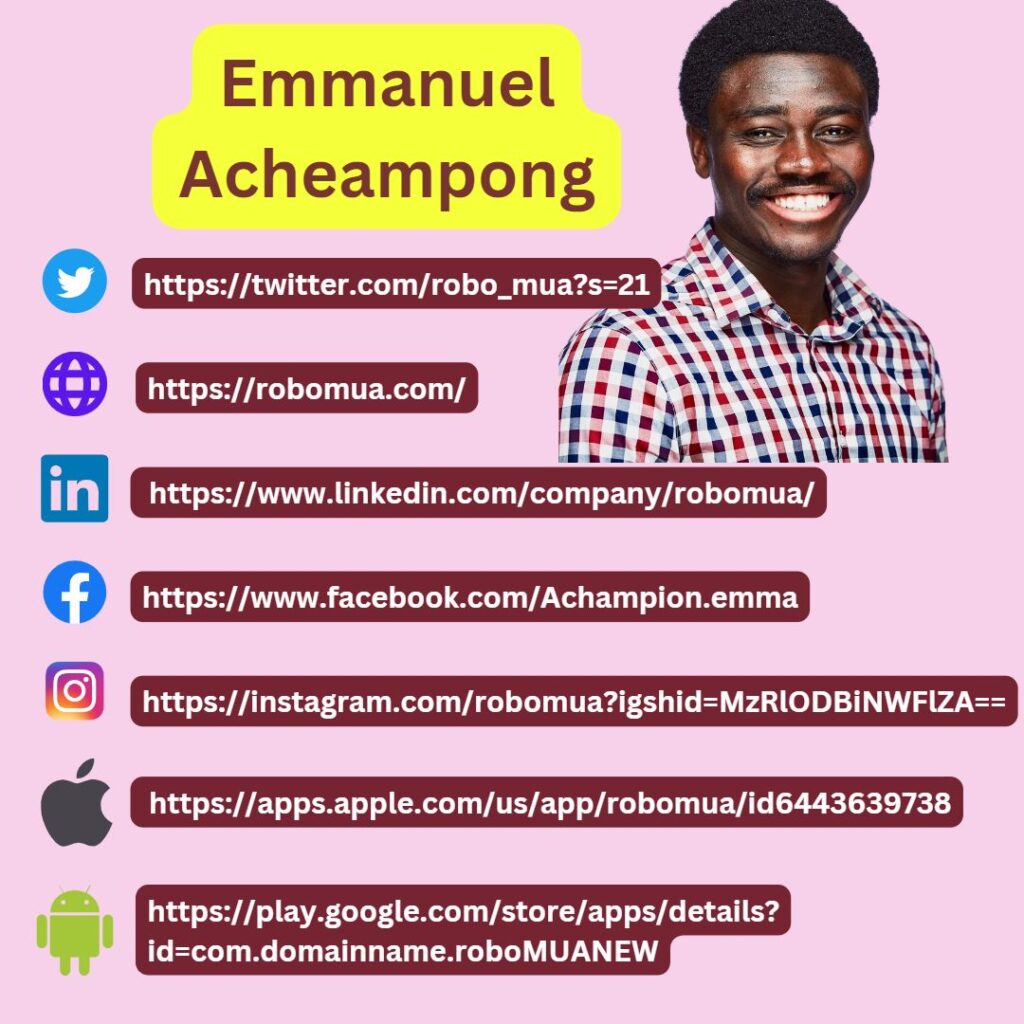

Peter: Definitely, we will. Thank you so much. Oh, I forgot to ask you to drop your handles. If you have a website for Robomua, you can mention it, and I will put it up on our screen.

Peter: OK. Thank you. And with that, have a beautiful day. We will talk again. Thank you so much, Emmanuel.

Emmanuel: Awesome, awesome. Thank you so much for your time, too.

Peter: You are welcome.

What’s next in Peter’s Box? ¡Hasta luego, amigos! (See you later, friends!)